A few months ago, I wrote decoders for the PCM formats used by the Sega CD and Sega Saturn versions of the game Lunar: Eternal Blue[1]. I wanted to write up a few notes on the Sega CD version’s uncompressed PCM format, and on some interesting lessons I learned from it.

All files in this format play at a sample rate of 16282Hz. This unusual number derives from the base clock of the PCM chip. Similarly, samples are 8-bit signed rather than 16-bit because that is the Sega CD PCM chip’s native format.
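
As a small illustration of the sample format, here is a minimal Python sketch that widens stored samples to 16-bit values, which is the usual output format for a modern decoder. It assumes the bytes are two’s-complement; the post only says “8-bit signed”, so that should be checked against a reference decoder. The names `SAMPLE_RATE_HZ`, `to_pcm16`, and `to_pcm16_samples` are hypothetical, not taken from any existing decoder.

```python
# Playback rate stated for every file in this format.
SAMPLE_RATE_HZ = 16282


def to_pcm16(byte_value: int) -> int:
    """Widen one stored 8-bit sample to the 16-bit signed range.

    ASSUMPTION: the byte is two's-complement signed. The format is only
    described as "8-bit signed", so verify this against a reference decoder.
    """
    if not 0 <= byte_value <= 0xFF:
        raise ValueError("expected a single byte value (0-255)")
    # Reinterpret 0x80..0xFF as -128..-1.
    signed = byte_value - 0x100 if byte_value >= 0x80 else byte_value
    # Place the 8-bit value in the top byte: -128..127 becomes -32768..32512.
    return signed << 8


def to_pcm16_samples(data: bytes) -> list[int]:
    """Convert a run of stored sample bytes to 16-bit sample values."""
    return [to_pcm16(b) for b in data]
```

Shifting left by 8 adds no resolution; it just moves the 8-bit value into the top byte of a 16-bit sample so it plays back at the expected loudness.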

Each file is divided into fixed-size frames: the header occupies the first frame, and the sample data fills the subsequent frames. Each frame is 2048 bytes, chosen because that is exactly the user-data size of a Mode 1 CD-ROM sector, and hence the most convenient chunk of data to read from a disc when streaming. This is also why the header takes up 2048 bytes, even though the data actually stored in it occupies fewer than 16 bytes.
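
Because every frame is the same size, locating frames is plain arithmetic: the header is bytes 0 to 2047, and content frame k starts at byte 2048 * (k + 1). A minimal sketch, assuming the file length is an exact multiple of 2048 (how a trailing partial frame would be handled isn’t described here). `split_frames` is a hypothetical helper, and it deliberately doesn’t parse the header fields, whose layout isn’t covered in this post.

```python
FRAME_SIZE = 2048  # user data in one Mode 1 CD-ROM sector


def split_frames(data: bytes) -> tuple[bytes, list[bytes]]:
    """Split a file into its header frame and its content frames.

    ASSUMPTION: the file is an exact multiple of FRAME_SIZE bytes.
    """
    if len(data) < FRAME_SIZE or len(data) % FRAME_SIZE != 0:
        raise ValueError("file is not a whole number of 2048-byte frames")
    # First frame: the header (only a handful of its bytes carry data).
    header = data[:FRAME_SIZE]
    # Every following 2048-byte chunk is one content frame.
    frames = [data[i:i + FRAME_SIZE] for i in range(FRAME_SIZE, len(data), FRAME_SIZE)]
    return header, frames
```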

Each content frame contains 1024 samples. Because each sample takes up one byte in the original format, only half of each frame is used for sample data; the samples are interleaved with 0 bytes. Although this looks like a pointless, inefficient use of space, it serves a purpose: it allows for oversampling. The PCM chip’s datasheet lists a maximum frequency of 19800Hz, but this trick allows for pseudo-32564Hz playback.
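
When decoding to an ordinary PCM stream at 16282Hz, the zero bytes can simply be dropped. The sketch below assumes the sample is the first byte of each two-byte pair; the format is only described as interleaving content with zero bytes, so `SAMPLE_OFFSET` is a hypothetical knob to flip if the real data is the other way around. `frame_samples` is likewise a hypothetical name.

```python
FRAME_SIZE = 2048
SAMPLES_PER_FRAME = FRAME_SIZE // 2  # 1024: one sample byte per two-byte pair

# ASSUMPTION: the sample byte comes first in each pair and the zero padding
# byte second. Set this to 1 if the real data is the other way around.
SAMPLE_OFFSET = 0


def frame_samples(frame: bytes) -> bytes:
    """Return the 1024 sample bytes of one content frame, dropping the padding."""
    if len(frame) != FRAME_SIZE:
        raise ValueError("expected a 2048-byte frame")
    # Take every other byte, starting at SAMPLE_OFFSET.
    return frame[SAMPLE_OFFSET::2]
```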

The format used in Lunar: Eternal Blue supports stereo playback, though only two songs are actually encoded in stereo[2]. Stereo songs interleave their channels not every sample, as most PCM formats do, but every frame: one 2048-byte frame contains 1024 left samples, the next frame contains the matching 1024 right samples, and so on. This was likely chosen because the PCM chip expects samples for only one output channel at a time and doesn’t implement stereo support itself.
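
Converting frame-interleaved stereo into the per-sample interleaving most audio APIs expect takes two steps: gather alternating frames into per-channel streams, then zip the streams back together sample by sample. A minimal sketch, assuming each list entry already holds one frame’s 1024 sample bytes with the padding removed; `deinterleave_stereo` and `interleave_lr` are hypothetical names.

```python
def deinterleave_stereo(blocks: list[bytes]) -> tuple[bytes, bytes]:
    """Split per-frame sample blocks into left and right channel streams.

    Frames alternate left, right, left, right, ..., so even-indexed
    blocks belong to the left channel and odd-indexed ones to the right.
    """
    if len(blocks) % 2 != 0:
        raise ValueError("stereo data needs an even number of frames")
    left = b"".join(blocks[0::2])
    right = b"".join(blocks[1::2])
    return left, right


def interleave_lr(left: bytes, right: bytes) -> bytes:
    """Re-pack two channel streams into conventional per-sample L/R order."""
    if len(left) != len(right):
        raise ValueError("channels must be the same length")
    out = bytearray(len(left) * 2)
    out[0::2] = left   # left sample first in each pair
    out[1::2] = right  # matching right sample second
    return bytes(out)
```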

The loop format used is highly idiosyncratic. Loop starts are measured in bytes from the beginning of the header; that is, a position to seek to from the start of actual content. The loop end, however, uses a different unit: it is measured as the sample at which the loop ends, not the byte. Because each sample occupies two bytes, and because the left and right samples of a stereo pair are counted as the same sample, this count differs from the byte position at the end of the song by a factor of 2 (mono) or 4 (stereo). This is a quirk of how the PCM playback routine works: it’s more efficient to track the number of samples played than the number of bytes played, so storing the loop end in that unit means no extra math is needed to determine whether the end of a loop has been reached. Similarly, counting left and right samples as the same sample is likely an artifact of the simplest way for the playback code to handle stereo.
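
The factor of 2 or 4 is easy to get wrong in a decoder, so here is the conversion spelled out. This is a minimal sketch: `loop_end_to_byte_count` is a hypothetical helper, and it assumes the result is counted from the start of the content data (just after the header). The original playback routine avoids this conversion entirely by comparing sample counts directly.

```python
BYTES_PER_SAMPLE = 2  # one sample byte plus one zero padding byte


def loop_end_to_byte_count(loop_end_samples: int, channels: int) -> int:
    """Convert the loop-end sample count into a count of content bytes.

    Mono: 2 bytes per sample. Stereo: a left/right pair counts as one
    sample but occupies 2 bytes in each channel's frames, so 4 bytes.
    ASSUMPTION: the count starts at the first content frame. For stereo,
    the result is an exact file position only when the loop end falls
    on a 1024-sample frame boundary.
    """
    if channels not in (1, 2):
        raise ValueError("only mono (1) and stereo (2) are supported")
    if loop_end_samples < 0:
        raise ValueError("loop end cannot be negative")
    return loop_end_samples * BYTES_PER_SAMPLE * channels
```

For example, a mono loop end of 1024 samples corresponds to one full 2048-byte frame of content, while a stereo loop end of 1024 samples covers one left frame and one right frame, 4096 bytes in all.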

Looking at these PCM files gave me a new appreciation for design, and for how important it is to understand the reasons behind design decisions. In a lot of ways this format feels like it should be “bad” design: it’s strange, it’s idiosyncratic, it’s internally inconsistent. But every detail serves a purpose: each of these idiosyncrasies was chosen to solve a particular problem, or to ensure peak performance at a particular bottleneck. Given the restrictions of a 16-bit game console, all of these choices were necessary to support constantly streaming audio like this in the first place. Aligning every detail of a format or API with the job it needs to do is its own kind of elegance, one I understand a little better now.

[1] I referenced a couple of open-source decoders for the Sega CD version’s format when writing my own (foo_lunar2 by kode54, and vgmstream), along with some notes given to me by kode54.

[2] The Pentagulia theme, and the sunken tower.

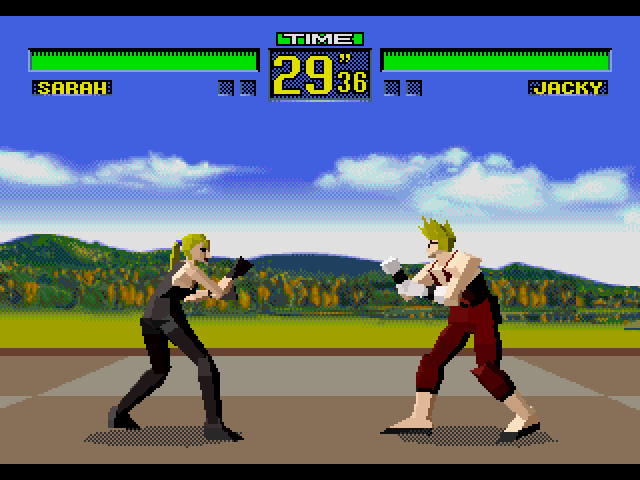

In 1999, an unofficial mod titled

In 1999, an unofficial mod titled

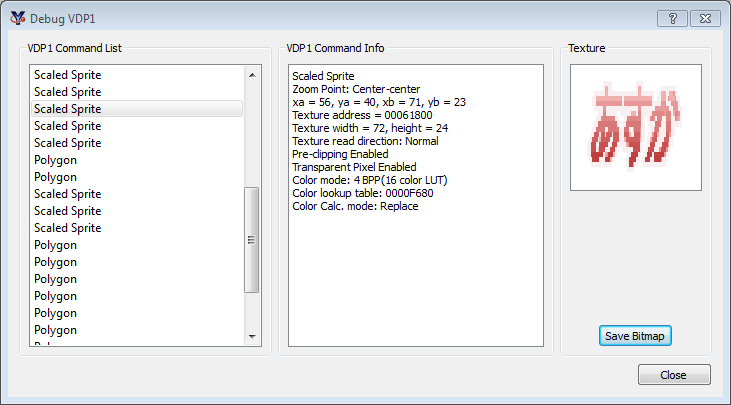

Via trial and error, and with help from information gleaned via the

Via trial and error, and with help from information gleaned via the